Secrets of Growing Your Business: How Dashly’s Growth Team Tests Hypotheses with Clients

For over six months, Dashly’s team has been running growth experiments to improve our clients’ inbound funnels. We work with companies from various industries, all focused on turning inbound leads into meetings. We’ve gotten so good at it that we can’t keep quiet any longer 🙂

So, I’ve decided to share our experience and results here.

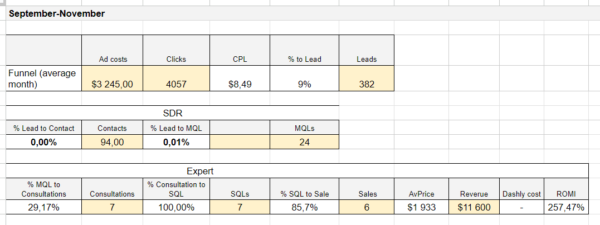

Our growth team works on pilots that last several months. Here are some results from our experiments:

- 75-85% conversion from starting the qualifying quiz to submitting a meeting request.

- 83% open rate for lead nurturing emails leading to meetings.

- 40% conversion to sales in the priority leads group.

Looking at these numbers, I’m super proud of our team. Here they are 👇

Vadim leads the pilots, handles the numbers, forecasts results, and builds experiments. Maria takes care of technical setup and pilot launches. I track whether the client benefits from our experiments, achieves results, and grows, and I turn what we learn into product solutions. Together, we find and formulate strong hypotheses that drive key metrics.

Who We Help and How

As mentioned, we work with companies that fit our ICP (ideal customer profile). Dozens of interviews with companies in the US market show that only 20-30% of leads who submit a form make it to a meeting. When we ask what they do about it, we often hear: “We reduce the number of fields in the form and pray.”

Another pain point is understanding the quality of these leads and where they came from.

Our overarching goal is to double the metrics of the inbound funnel: convert traffic into quality leads, then convert quality leads into meetings, ultimately improving the ROMI (return on marketing investment) of advertising channels.

Reducing sales team costs is another goal. The average salary of a salesperson in the US is about $6,500 per month, so we help salespeople spend their time on higher-quality leads and more complex tasks.

Not Just an Outsourced Growth Team

We believe our results come down to how we structure our work:

- Constant Client Contact: We have bi-weekly syncs on experiments and are always available in the chat. Our pilots are a collaborative effort with the client. Together, we set goals and work towards results.

- Transparency and Expectation Management: If actual numbers don’t match expectations, we discuss it with the client ASAP. We figure out what went wrong, how to fix it, and how it impacts our goal.

- Immersion in the Client’s Context: We research the client to understand where the problems and growth points are. Yes, it takes time, but it pays off in the long run.

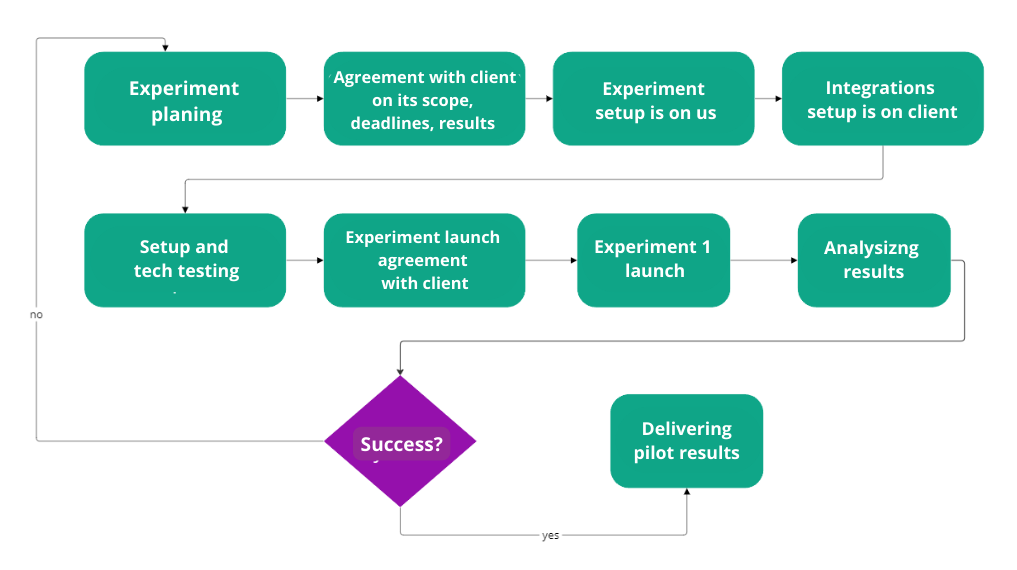

Here’s a rough setup of a pilot 👇

- Data Collection: We analyze six months of client data, including conversions, deal cycles, and training materials for our AI bot. We also define clear criteria for key metrics like MQL (marketing qualified lead) and SQL (sales qualified lead).

- Identifying Stakeholders: We determine who on the client’s team makes decisions and who we’ll be working with. This is crucial for effective communication and achieving results.

- Funnel Building: After collecting data, we create a client-specific funnel and define Dashly’s role at each stage, setting expected results in metrics.

- Visualization: We build a basic CJM (Customer Journey Map) of the client’s site and show visually how Dashly fits in at each stage.

- Technical Integration: We integrate with the client’s CMS, CRM, and analytics so we always have a holistic, up-to-date view. This includes setting up additional user journey events using GTM (Google Tag Manager), GA (Google Analytics), and our own analytics. We work closely with the client’s technical team, especially on API integrations.

After the setup, we have stakeholders, a clear CJM, numerical goals, and a rhythm for achieving them.

NSM: Launching 2 Experiments per Week

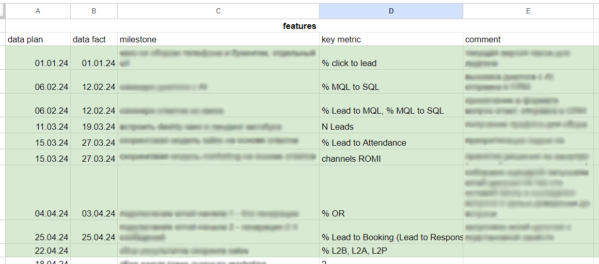

Our North Star Metric as a growth team is the number of experiments launched per week. We track all pilots and experiments three times a week within our team. First, we define each experiment’s goals and how we will measure the results: every experiment must be described in terms of metrics, deadlines, and responsibilities. If someone on the client’s side is involved, we make sure they won’t slow down the launch or compromise its quality.
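To make the "metrics, deadlines, and responsibilities" rule concrete, here is a minimal sketch of an experiment card that refuses to count as launch-ready until every required field is filled. The class name, field names, and example values are hypothetical, not Dashly’s actual tracker.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


# Hypothetical experiment card: field names are illustrative, not a real tracker schema.
@dataclass
class ExperimentCard:
    name: str
    hypothesis: str
    metric: str                  # the funnel metric the experiment should move
    baseline: Optional[float]    # current value of that metric
    target: Optional[float]      # expected value after the change
    deadline: Optional[date]
    owner: str                   # responsible person on the growth team
    client_owner: str = ""       # optional counterpart on the client's side

    def missing_fields(self) -> list[str]:
        """Required fields that are still empty; a card with gaps is not ready to launch."""
        required = ("name", "hypothesis", "metric", "baseline", "target", "deadline", "owner")
        return [name for name in required if getattr(self, name) in (None, "")]

    def ready_to_launch(self) -> bool:
        return not self.missing_fields()


# Hypothetical example: the deadline has not been agreed yet.
card = ExperimentCard(
    name="Qualifying quiz before the demo form",
    hypothesis="A short quiz raises form-to-meeting conversion",
    metric="form submit -> meeting",
    baseline=0.25,
    target=0.50,
    deadline=None,
    owner="growth team lead",
)
print(card.missing_fields())  # ['deadline']
```

Keeping `client_owner` optional mirrors the text: a client-side participant is not always involved, but when one is, the card is where that dependency becomes visible.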

The next step is checking if there are any blockers that might derail timelines or results. In about 100% of cases, something goes wrong. Technical issues, unapproved changes, lack of expertise…

The last step is results and conclusions. Many teams forget this step, especially the conclusions. Results get collected somewhere in analytics, and the numbers may even get calculated, but explaining why an experiment worked or didn’t is often skipped.

We dedicate an hour to each point on Monday, Wednesday, and Friday. This keeps the rhythm of launching and summarizing experiments.

What a Product Pilot Looks Like

Pilots involve changes to the client’s CJM, and each change needs approval, development, and an impact estimate. Every change is then tested for its effect on funnel metrics. We agree with the client on what will be implemented and how it will evolve.

We aim to launch one experiment per week. First, we map the current funnel and its metrics to calculate the project’s total profit and value for both the client and us. For this:

- We conduct interviews with the client;

- Audit the current CJM;

- Map the funnel with its current metrics;

- Model the metrics and decide with the client where improvements are needed;

- Apply our scenario to this part of the funnel and forecast the change.
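The modeling and forecasting steps above can be sketched as a simple multiplicative funnel: the bottom-of-funnel count is the number of entrants times each stage’s conversion rate, and a scenario is forecast by swapping in a new rate for one stage. This is a minimal sketch, not Dashly’s model; the stage names and the traffic and rate values are hypothetical, except that 25% is the midpoint of the 20-30% form-to-meeting range cited earlier.

```python
from math import prod


def funnel_output(top_of_funnel: int, rates: dict[str, float]) -> float:
    """Expected count at the bottom of the funnel: entrants times every stage conversion."""
    return top_of_funnel * prod(rates.values())


def forecast(top_of_funnel: int, rates: dict[str, float],
             stage: str, new_rate: float) -> tuple[float, float]:
    """Return (current, forecast) bottom-of-funnel counts if one stage's conversion changes."""
    if stage not in rates:
        raise KeyError(f"unknown stage: {stage}")
    changed = {**rates, stage: new_rate}
    return funnel_output(top_of_funnel, rates), funnel_output(top_of_funnel, changed)


# Hypothetical funnel: 25% is the midpoint of the 20-30% range; other rates are made up.
rates = {
    "visit -> form": 0.02,
    "form -> meeting": 0.25,
    "meeting -> deal": 0.20,
}
current, improved = forecast(10_000, rates, "form -> meeting", 0.50)
print(f"deals now: {current:.0f}, forecast: {improved:.0f}")  # deals now: 10, forecast: 20
```

Because the stages multiply, doubling any single stage doubles the output, which is why choosing *where* to apply a scenario matters as much as the scenario itself.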

Our goal is to double the inbound funnel metrics, so we look for strong scenarios (hypotheses) that deliver dramatic results and drive revenue and ROMI growth. In every pilot, we aim for at least 1000% ROMI on the cost of the solution.
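The post doesn’t spell out its ROMI formula. Assuming the common definition, ROMI = (incremental revenue − cost) / cost × 100%, a 1000% target means the solution must bring in 11 times its cost in incremental revenue. A minimal sketch of that arithmetic (the function names and the cost figure are hypothetical; some teams use gross profit instead of revenue in the numerator):

```python
def romi_pct(incremental_revenue: float, cost: float) -> float:
    """ROMI in percent, assuming (revenue attributed to the solution - its cost) / cost * 100."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (incremental_revenue - cost) / cost * 100


def revenue_for_target(cost: float, target_romi_pct: float) -> float:
    """Incremental revenue needed to reach a target ROMI: cost * (1 + target / 100)."""
    return cost * (1 + target_romi_pct / 100)


# Hypothetical solution cost of 5,000 (any currency).
needed = revenue_for_target(5_000, 1000)
print(needed)                   # 55000.0
print(romi_pct(needed, 5_000))  # 1000.0
```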

Collaboration with the Client

As I said, within our team, we track progress three times a week, discussing experiment processes, deadlines, and results. This is crucial for us.

Together with the client, we collect experiment results and summarize them in a shared table that contains the experiment roadmap with plan vs. fact for each experiment.
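A plan/fact table like this reduces to one ratio per experiment. The sketch below is a hypothetical illustration: the row structure, the 90% tolerance, and all numbers are assumptions, not real pilot data. It flags experiments whose fact falls short of plan, which is the cue for the expectation-management conversation described earlier.

```python
from dataclasses import dataclass


@dataclass
class RoadmapRow:
    experiment: str
    metric: str
    plan: float  # planned value of the metric (must be non-zero)
    fact: float  # measured value after the experiment

    @property
    def attainment(self) -> float:
        """Fact as a share of plan (1.0 means the plan was met exactly)."""
        if self.plan == 0:
            raise ValueError("plan must be non-zero")
        return self.fact / self.plan


def shortfalls(rows: list[RoadmapRow], tolerance: float = 0.9) -> list[str]:
    """Experiments whose fact fell below `tolerance` of plan (hypothetical threshold)."""
    return [row.experiment for row in rows if row.attainment < tolerance]


# Hypothetical rows; names and numbers are illustrative only.
rows = [
    RoadmapRow("Experiment A", "quiz start -> meeting request", plan=0.75, fact=0.80),
    RoadmapRow("Experiment B", "form -> meeting", plan=0.40, fact=0.22),
]
for row in rows:
    print(f"{row.experiment}: {row.attainment:.0%} of plan")
print("Discuss with client:", shortfalls(rows))  # Discuss with client: ['Experiment B']
```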

The client is involved beyond the goal-setting stage. We jointly form the pilot team on their side and define roles, responsibilities, and key contacts. Since we make significant changes to the funnel, our work affects many people on their team and depends on them as well. That’s why we invite all key team members to meetings.

We have weekly syncs with them to discuss:

- Current experiment status;

- Next steps;

- What we need from the client to proceed;

- Client requests.

Sometimes these syncs matter simply for sharing where things stand.

At the start of one pilot, we struggled to achieve results, and at some point we noticed the team was feeling anxious. Such moods can be very demoralizing. We caught this quickly, reflected, and decided to tell the client that we hadn’t seen results for three weeks. We arranged a meeting and talked openly about our concerns.

Plot twist: the client was also worried about us, fearing that our efforts were not paying off. They even thought the problem was on their side. This reflection was very much needed by both sides. We shared our concerns, bonded, and moved forward.

We still work with this team 🙂 We’re launching many hypotheses and achieving significant results for the client.

Recent successful hypotheses include warm-up emails and lead scoring. We increased email open rates from 50% to 89% across a large database. Scoring doubled the conversion to purchase in the priority leads group (14% for priority vs. 6.5% for the regular group).
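The post doesn’t describe how the scoring model works. Purely as a hypothetical illustration, a rule-based score can split leads into a priority group and a regular group, and the two groups’ conversion rates can then be compared as a lift. The fields, weights, and threshold below are invented; only the 14% and 6.5% rates come from the text, and they give a lift of about 2.15x, consistent with "doubled".

```python
from dataclasses import dataclass


@dataclass
class Lead:
    # All fields are hypothetical signals; real scoring would use the client's own data.
    company_size: int        # employees
    role: str                # normalized job title
    visited_pricing: bool    # behavioral signal from site analytics
    purchased: bool = False  # outcome, known only after the sales cycle


# Hypothetical weights and threshold, chosen for illustration, not fitted to any data.
def score(lead: Lead) -> int:
    points = 0
    if lead.company_size >= 50:
        points += 40
    if lead.role in {"founder", "head of sales", "vp sales"}:
        points += 30
    if lead.visited_pricing:
        points += 30
    return points


def is_priority(lead: Lead, threshold: int = 60) -> bool:
    return score(lead) >= threshold


def conversion(leads: list[Lead]) -> float:
    """Share of leads that purchased (0.0 for an empty group)."""
    return sum(lead.purchased for lead in leads) / len(leads) if leads else 0.0


def lift(priority_rate: float, regular_rate: float) -> float:
    """How many times higher the priority group's conversion is than the regular group's."""
    return priority_rate / regular_rate


# With the rates reported above (14% priority vs. 6.5% regular):
print(f"lift: {lift(0.14, 0.065):.2f}x")  # lift: 2.15x
```

In practice, you would split a lead list with `[lead for lead in leads if is_priority(lead)]`, compute `conversion()` for each group once outcomes are known, and pass the two rates to `lift()`.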

But, of course, work isn’t always so rosy 🙂

When Testing Doesn’t Go as Planned

First, we find out why. Are we falling short because of insufficient traffic? Bugs? Or is everything working correctly, but the scenario is weak?

Depending on the reason, we act accordingly: we make sure there is enough traffic for statistically significant data, fix the bugs, or accept that the hypothesis itself is weak.
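"Enough traffic" can be estimated before launch. One standard approach, not necessarily the one Dashly uses, is the sample-size formula for a two-sided two-proportion z-test (without continuity correction). The sketch below assumes a 5% significance level and 80% power. In the example, moving form-to-meeting conversion from 25% (midpoint of the 20-30% range above) to 50% (the doubling goal) needs about 58 leads per group. Smaller effects need far more traffic.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Leads needed in EACH group to detect p_control -> p_variant
    with a two-sided two-proportion z-test (no continuity correction)."""
    if p_control == p_variant:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    p_bar = (p_control + p_variant) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_control * (1 - p_control)
                                 + p_variant * (1 - p_variant))) ** 2
    return ceil(numerator / (p_control - p_variant) ** 2)


print(sample_size_per_group(0.25, 0.50))  # 58
print(sample_size_per_group(0.25, 0.30))  # a 5-point lift needs well over a thousand per group
```

If the pilot’s traffic can’t reach the required sample in a reasonable time, that is a signal to test a bolder change or measure a higher-volume stage of the funnel.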

It’s important to accept that in experiments, things often don’t go as planned.

For example, we spent a lot of time on the concept of an AI SDR (sales development representative) to handle initial communication with leads and schedule meetings, freeing up salespeople’s time and cutting team costs.

We were very excited about this idea and invested a lot of effort, time, and money, but the scenarios didn’t deliver the expected results.

One was an AI bot meant to hold the lead until a salesperson could connect. We worked on it for a long time: trained it on the client’s knowledge base, tweaked prompts for relevant answers, and scored these answers.

And… it didn’t work.

It’s quite disheartening when you believe in and invest in something that doesn’t deliver the desired result. It’s painful. But if you can draw conclusions about why it didn’t work, it wasn’t in vain.

We realized we needed to shorten the time between lead submission and sales contact instead of having the bot chat with the lead. That’s what we’re working on now.

And so, one hypothesis after another. Is it frustrating?

Absolutely.

Does it make you mad?

Insanely.

But this is growth! You’ll be disappointed and curse at ten spreadsheets where the facts don’t match the plan before you find the one hypothesis that delivers significant results.

In the next post, I’ll share more about our work on the AI SDR: a long journey of prototypes, consultations, and tests. It was painful, but that made it especially valuable, and it illustrates the growth approach well.

If you want to grow by testing hypotheses, we are ready to take on up to TWO more clients for joint work.

We offer a team with deep expertise and a big discount, but the selection is tough. If you have an inbound sales funnel generating leads and a sales team handling them, email me at team@dashly.io.

Let’s discuss your case!
